Microsoft recently released their new SRE Agent, and they posted this article introducing it. I haven’t yet tested the SRE Agent myself, but I feel that I have a good idea of what to expect just from looking closely at the article.

It starts with a quick demo video showing what the agent can do (direct YouTube link). This is actually somewhat rare in articles about AI tools for SRE. The vast majority of such articles go on at length about how useful their AI tool is, but they lack something very critical to help us decide whether it’s worth our time to try it out: real-world examples. I’ve written about this before (AIOps: Prove it!), and it’s the reason why you won’t see many articles of this sort in SRE Weekly.

This time though, we have an example interaction with Azure’s AI SRE agent. Let’s check it out!

The agent investigates

In the video, the user asks the AI why their app is having connection issues. The agent dives right in and starts pulling up graphs for the service. The video quickly scrolls through a couple of graphs and the agent's commentary, including this one:

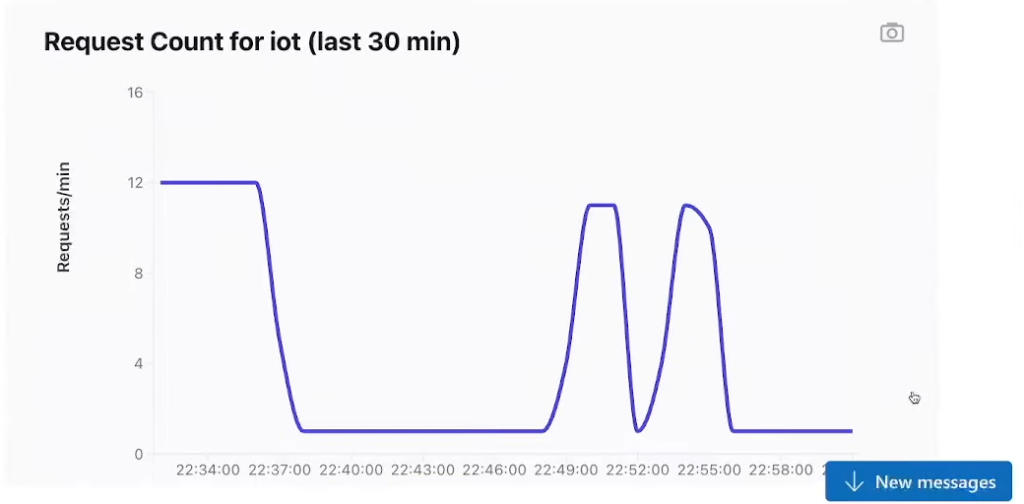

Request pattern for iot. Notice the drop and recovery in request volume.

I’ve charted the request and memory metrics for iot. Memory usage is consistently low (around 2-3%), and there was a noticeable drop in request volume, followed by recovery. Next, I’ll check the logs for the latest revision to identify any error patterns or connectivity issues.

Azure SRE Agent (source: Azure’s video)

The agent says that the request rate dropped and then recovered. In fact, according to the graph it produced, the request rate dropped to near-zero, briefly went back up and down a couple of times, and then stayed down.

Right out of the gate, the AI has drawn an obviously incorrect conclusion from the data that it presented. Is it then basing the rest of its investigation on this erroneous conclusion?
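To make the distinction concrete, here's a minimal Python sketch of the kind of sanity check that separates "dropped and then recovered" from "dropped and stayed down": compare the last few samples against the pre-incident baseline. The function name `classify_tail`, the window sizes, the 50% threshold, and the sample values are all my own illustrative assumptions. The numbers are not read off Azure's graph, and this isn't a claim about how the agent works internally.

```python
from statistics import mean


def classify_tail(series, baseline_len=5, tail_len=5, threshold=0.5):
    """Label a request-rate series by comparing its final window to its baseline.

    Returns "recovered" if the mean of the last `tail_len` samples is at least
    `threshold` times the mean of the first `baseline_len` samples, and
    "still down" otherwise. Window sizes and threshold are arbitrary choices.
    """
    baseline = mean(series[:baseline_len])
    tail = mean(series[-tail_len:])
    return "recovered" if tail >= threshold * baseline else "still down"


# Hypothetical samples (requests/min), NOT taken from the video.
# Shaped like the graph described above: steady traffic, a drop to near zero,
# two brief bounces, then flat.
shape_in_graph = [100, 98, 102, 101, 99, 2, 1, 60, 3, 55, 2, 1, 0, 1, 0]

# A genuine dip-and-recovery, for comparison.
dip_and_recover = [100, 98, 102, 101, 99, 2, 1, 40, 95, 100, 101, 99, 98, 100, 102]

print(classify_tail(shape_in_graph))   # still down
print(classify_tail(dip_and_recover))  # recovered
```

Applied to a series shaped like the one in the video, even a check this crude returns "still down", which is the opposite of the agent's summary.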

The agent finds a bad security group rule

The agent goes on to examine the logs, finds nothing interesting, and then jumps to investigating security group rules. It finds a suspicious rule that it claims prevents the app from reaching its Redis database and jumps right to removing the rule: “I will remove this rule to restore connectivity and then verify the app’s health”.

This is surprising for a couple of reasons. First of all, why did the agent jump right to security group rules? It says, “Next, I’ll check for any network security group (NSG) rules that might be blocking connections, especially to Redis or other dependencies”. Sure enough, that’s exactly what it found.

How would the agent know to check for a rule blocking Redis? Why does such a rule exist? Surely it can’t have been there the whole time, since the app was functioning up until recently. Presumably the NSG rule was just added—perhaps to test the agent?

How did this NSG rule cause traffic to drop and recover a couple of times? For that matter, how does a failure to connect to a backend datastore cause front-end requests to drop? Presumably the backend failure could cause browser-side scripts to error out and stop sending requests, but this isn't at all clear, and I'm especially unsure whether the AI agent "thought" of that. Also, isn't this an IoT app? Does it even have browser-side scripts?

The agent takes action

Equally surprising is the fact that the agent immediately started to take action. This all stems from a simple question about connectivity issues, and the user gave no indication that they wanted the agent to fix the problem. Thankfully, the agent does seem to require human approval before actually executing its planned action.

Even so, there's cause for concern here. The agent seems geared toward pushing actions on the user, and it's very easy to click that "Approve" button. Might a less experienced operator trust the agent's reasoning for a dangerous operation and click "Approve"? This is especially concerning when we've already seen an example of flawed reasoning on the part of the agent.

My impression

The article goes on to list the kinds of questions you can ask the agent, with example output. This is where I start to see the value in a tool like this. It seems that you can ask Azure's SRE agent questions about your infrastructure and quickly get back detailed answers, with graphs. I can see the potential for that to save operators a ton of time.

Still, based on Microsoft’s own example interaction in their video, I have significant reservations about their SRE Agent. If this is the example they chose to share with us, what does that say?
